Spark Download Mac Integrate Jupyter

Integrate Spark and Jupyter Notebook

Install a Python environment through pyenv, a Python version manager. Run `pyenv install 3.6.7`, set Python 3.6.7 as the main Python interpreter with `pyenv global 3.6.7`, reload the shell configuration with `source ~/.zshrc`, and update pip from 10.0.1 to 18.1 with `pip install --upgrade pip`.

Jupyter is a tool that allows data scientists to record their complete analysis process, much in the same way other scientists use a lab notebook to record tests, progress, results, and conclusions.

Apache Spark is a powerful open-source cluster-computing framework. Compared to Apache Hadoop, especially Hadoop MapReduce, Spark offers advantages in speed, generality, ease of use, and interactivity. For Python developers like me, one fascinating feature of Spark is that Jupyter Notebook can be integrated with PySpark, the Spark Python API. Unlike Hadoop MapReduce, where you first have to write the mapper and reducer scripts and then run them on a cluster to get the output, PySpark with Jupyter Notebook lets you write code interactively and see the output right away.

In the development phase, before distributing the computing work onto an online cluster, it’s useful to have a workspace on hand where we can first write and test code or get some preliminary results. The workspace could be our local laptop, or a low-cost cloud service such as Amazon Web Services or Google Cloud Platform. We can install and configure Spark and Jupyter Notebook on a Mac by running a Linux instance via VirtualBox, which is probably the most straightforward way. That approach frustrated me, though: my Mac has only 2 cores and 8 GB of memory, and the long setup and the dramatic performance degradation finally exhausted my patience and led me to turn to a cloud service.

I have used both AWS and Google Cloud for some projects. I don’t want to compare the two here, since both are excellent cloud service providers that offer a wide range of products. In this post I’ll show you how to install Spark and Jupyter Notebook and get them ready for use on a Google Cloud Compute Engine Linux instance. The steps should be similar if you decide to use an AWS EC2 instance instead.

Prerequisite

Before we go ahead, I assume you already have some experience with Google Cloud Platform as well as Jupyter Notebook. This article is not a primer for readers with little or no experience of cloud services and Jupyter Notebook.

Install and set up

1. Set up a Google Cloud Compute Engine instance

The first step is to create a Compute Engine Linux instance. Make sure you select f1-micro as the machine type, as this is the only free-tier machine type provided by Google Cloud. Check Allow HTTP traffic and Allow HTTPS traffic, as the Jupyter Notebook will be accessed via the browser.

2. Install supporting packages and tools:

Connect to the Compute Engine Linux instance via ssh:

Install pip, which is a Python package manager:

Install Jupyter:

Install the Java runtime environment, since Spark is written in Scala, and Scala runs on the JVM:

Install Scala:

Install py4j library, which connects Python to Java:
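Once the packages above are installed, a quick check from Python can confirm that they are visible. This is only a sketch: the executable names (java, scala, jupyter) and the module name (py4j) are assumptions about how the tools were installed, and check_prerequisites is a hypothetical helper, not part of any library.

```python
import importlib.util
import shutil


def check_prerequisites(executables=("java", "scala", "jupyter"), modules=("py4j",)):
    """Report which tools are on PATH and which Python modules are importable."""
    report = {}
    for name in executables:
        # shutil.which returns the full path, or None when the tool is not on PATH.
        report[name] = shutil.which(name) is not None
    for name in modules:
        # find_spec returns None for a top-level module that is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    return report
```

Calling check_prerequisites() returns a dictionary mapping each name to True or False, so any entry that comes back False points to a step that needs to be repeated.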

3. Install Spark library

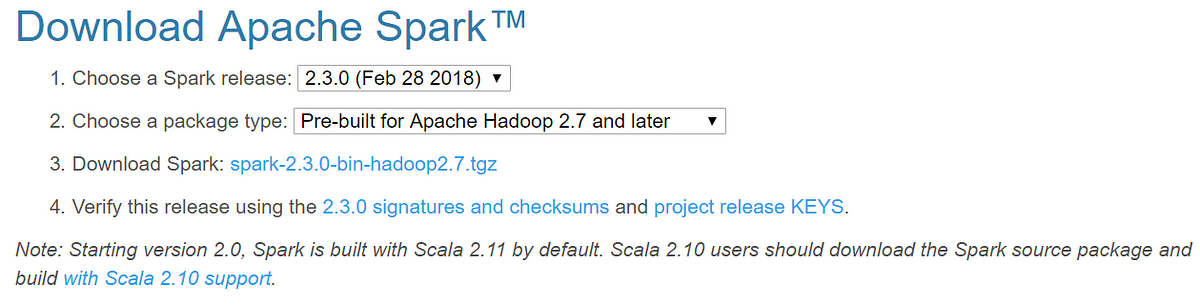

With all the above-mentioned tools and packages installed, we can now install the actual Spark library. Go to the Apache Spark download webpage, select the latest Spark release version, and click the tgz file. You will be redirected to a download page; copy the link address shown there. Then go to the terminal and run the following command, pasting the link address:

Unzip the tgz file:

Now your Spark library is unpacked into a directory called spark-2.2.1-bin-hadoop2.7. Get the path of this directory; we’ll use it later:

By default, PySpark is not on sys.path, but this doesn’t mean it cannot be used as a regular library. We can address this by either symlinking PySpark into our site-packages, or adding PySpark to sys.path at runtime. The Python package called findspark does exactly the latter. Install findspark with pip (`pip install findspark`).
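To make "adding PySpark to sys.path at runtime" concrete, here is a minimal sketch of the idea. It is a simplified illustration, not findspark's actual implementation. The function name add_pyspark_to_path is hypothetical, and the layout it assumes (a python directory with a lib folder holding a py4j zip archive) is the usual layout of a Spark binary distribution.

```python
import glob
import os
import sys


def add_pyspark_to_path(spark_home):
    """Prepend Spark's Python sources to sys.path and return the added entries."""
    spark_python = os.path.join(spark_home, "python")
    # Spark bundles py4j as a zip archive under python/lib.
    py4j_zips = sorted(glob.glob(os.path.join(spark_python, "lib", "py4j-*.zip")))
    added = [spark_python] + py4j_zips
    # Insert in reverse so the entries end up at the front in their listed order.
    for entry in reversed(added):
        if entry not in sys.path:
            sys.path.insert(0, entry)
    # PySpark locates the Spark installation through this environment variable.
    os.environ["SPARK_HOME"] = spark_home
    return added
```

After a call such as add_pyspark_to_path with the directory from the previous step, import pyspark would resolve to the copy shipped inside the Spark download. In practice findspark handles these details for us.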

4. Open a Jupyter Notebook server:

From the terminal, you’ll see something like this:

The URL shown is important: we will use it to log in to the Jupyter Notebook. But before we do that, we need to connect to the Compute Engine instance a second time.

5. Connect to the instance again

Do not close or exit the first terminal. Open another terminal and type the following command:

This will redirect data from the specified local port, through the secure tunnel, to the specified destination host and port.
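The port forwarding described above can be sketched from Python as well. This is an illustration under stated assumptions: port 8888 is assumed because it is Jupyter's default, the user and host arguments are placeholders for your own login name and the instance's external IP address, and tunnel_command and open_tunnel are hypothetical helper names. The ssh options used are -N (forward ports only, run no remote command) and -L (local port forwarding).

```python
import subprocess


def tunnel_command(user, host, local_port=8888, remote_port=8888):
    """Build an ssh command that forwards local_port to remote_port on the instance."""
    return [
        "ssh",
        "-N",  # do not run a remote command, only forward ports
        "-L", f"localhost:{local_port}:localhost:{remote_port}",
        f"{user}@{host}",
    ]


def open_tunnel(user, host):
    """Start the tunnel as a child process; call .terminate() on the result to close it."""
    return subprocess.Popen(tunnel_command(user, host))
```

With the tunnel open, requests to localhost:8888 on the laptop travel through the SSH connection to port 8888 on the instance, which is where the Jupyter Notebook server from step 4 is assumed to be listening.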

6. Log in to Jupyter Notebook from your local browser

Now you are ready to log in to the Jupyter Notebook from your local browser. Open a browser, either Safari or Chrome, and paste the URL mentioned in step 4. You should now be logged in to the Jupyter Notebook.

7. Set up PySpark with Jupyter Notebook

To set up PySpark with Jupyter Notebook, create a Python notebook and type the following command:

The findspark module adds PySpark to sys.path at runtime. You need to specify the path of the Spark directory we unpacked in step 3.
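A minimal sketch of this setup, assuming findspark was installed in step 3. The path in SPARK_HOME is a hypothetical example and must be replaced with the directory path you noted earlier; load_pyspark is a helper name invented for this illustration. The imports sit inside the function so the snippet can be pasted into a cell without failing before findspark is available.

```python
# Hypothetical location; replace with the path of your own Spark directory.
SPARK_HOME = "/home/your_username/spark-2.2.1-bin-hadoop2.7"


def load_pyspark(spark_home=SPARK_HOME):
    """Make PySpark importable via findspark and return the pyspark module."""
    import findspark  # assumed to be installed with pip in step 3

    # Point findspark at the Spark directory so it can extend sys.path.
    findspark.init(spark_home)
    import pyspark

    return pyspark
```

In a notebook cell, pyspark = load_pyspark() would then give access to the PySpark API. Calling findspark.init(...) followed by import pyspark directly in the cell has the same effect.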

Now, if you type import pyspark, PySpark will be imported. You are now up to speed and ready to play with Spark using Jupyter Notebook.

RELATED ARTICLES

Are you learning or experimenting with Apache Spark? Do you want to quickly use Spark with a Jupyter iPython Notebook and PySpark, but don’t want to go through a lot of complicated steps to install and configure your computer? Are you in the same position as many of my Metis classmates: you have a Linux computer and are struggling to install Spark? One option that lets you get started quickly with writing Python code for Apache Spark is using Docker containers. This approach also works almost the same on Mac, Windows, and Linux.

Curious how? Let me show you!

According to the official Docker website:

“Docker containers wrap a piece of software in a complete filesystem that contains everything needed to run: code, runtime, system tools, system libraries – anything that can be installed on a server. This guarantees that the software will always run the same, regardless of its environment.”

It also helps to understand how Docker “containers” relate (somewhat imperfectly) to shipping containers.

In this tutorial, we’ll take advantage of Docker’s ability to package a complete filesystem that contains everything needed to run. Specifically, everything needed to run Apache Spark. You’ll also be able to use this to run Apache Spark regardless of the environment (i.e., operating system). That means you’ll be able to generally follow the same steps on your local Linux/Mac/Windows machine as you will on a cloud virtual machine (e.g., AWS EC2 instance). Pretty neat, huh?

First you’ll need to install Docker. Follow the instructions in the install / get started links for your particular operating system:

- Mac: https://docs.docker.com/docker-for-mac/

- Windows: https://docs.docker.com/docker-for-windows/

- Linux: https://docs.docker.com/engine/getstarted/

Note: On Linux, you will get a Can't connect to docker daemon. error if you don’t use sudo before any docker commands. To avoid typing sudo each time you run a docker command, I highly recommend you add your user (ubuntu in the example below) to the docker user group. See “Create a Docker group” for more info.

Make sure to log out from your Linux user and log back in again before trying docker without sudo.

We’ll use the jupyter/pyspark-notebook Docker image. The great thing about this image is it includes:

- Apache Spark

- Jupyter Notebook

- Miniconda with Python 2.7.x and 3.x environments

- Pre-installed versions of pyspark, pandas, matplotlib, scipy, seaborn, and scikit-learn

Create a new folder somewhere on your computer. You’ll store the Jupyter notebooks you create and other Python code to interact with Spark in this folder. I created my folder in my home directory as shown below, but you should be able to create the folder almost anywhere (although I would avoid any directories that require elevated permissions).

To run the container, all you need to do is execute the following:

What’s going on when we run that command?

The -d runs the container in the background.

The -p 8888:8888 makes the container’s port 8888 accessible to the host (i.e., your local computer) on port 8888. This will allow us to connect to the Jupyter Notebook server since it listens on port 8888.

The -v $PWD:/home/jovyan/work allows us to map our spark-docker folder (which should be our current directory, $PWD) to the container’s /home/jovyan/work working directory (i.e., the directory the Jupyter notebook will run from). This makes the notebooks we create accessible in our spark-docker folder on our local computer. It also allows us to make additional files such as data sources (e.g., CSV, Excel) accessible to our Jupyter notebooks.

The --name spark gives the container the name spark, which allows us to refer to the container by name instead of ID in the future.

The final part of the command, jupyter/pyspark-notebook, tells Docker we want to run the container from the jupyter/pyspark-notebook image.
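Putting the flags described above together, the command corresponds to docker run -d -p 8888:8888 -v $PWD:/home/jovyan/work --name spark jupyter/pyspark-notebook on the command line. The sketch below assembles the same arguments from Python so that each piece is visible. The function name docker_run_command is hypothetical, and running the result assumes Docker is installed and on your PATH.

```python
import os
import subprocess


def docker_run_command(host_dir, name="spark", port=8888):
    """Assemble the docker run arguments for the jupyter/pyspark-notebook image."""
    return [
        "docker", "run",
        "-d",                                   # run in the background
        "-p", f"{port}:{port}",                 # expose the Jupyter port to the host
        "-v", f"{host_dir}:/home/jovyan/work",  # share the notebook folder
        "--name", name,                         # refer to the container by name
        "jupyter/pyspark-notebook",             # image to start the container from
    ]


def run_container(host_dir=None):
    """Start the container, mounting host_dir (default: the current directory)."""
    host_dir = host_dir or os.getcwd()
    subprocess.run(docker_run_command(host_dir), check=True)
```

Calling run_container() from inside the notebook folder mirrors the use of $PWD in the shell version.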

For more information about the docker run command, check out the Docker docs.

The jupyter/pyspark-notebook image automatically starts a Jupyter Notebook server.

Open a browser to http://localhost:8888 and you will see the Jupyter home page.

Once you have the Jupyter home page open, create a new Jupyter notebook using either Python 2 or Python 3.

In the first cell, run the following code. The result should be five integers randomly sampled from 0-999, but not necessarily the same as what’s below.
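A first cell along these lines might look like the sketch below. It assumes the pyspark package that ships with the jupyter/pyspark-notebook image and a local master (local[*]); the function name sample_five_integers is only for illustration. Because the sample is random, no particular output is shown.

```python
def sample_five_integers():
    """Draw five integers at random from 0-999 using a local Spark context."""
    import pyspark  # provided by the jupyter/pyspark-notebook image

    # Reuse an existing context if this notebook already created one.
    conf = pyspark.SparkConf().setMaster("local[*]")
    sc = pyspark.SparkContext.getOrCreate(conf)
    # Distribute the numbers 0..999 as an RDD.
    rdd = sc.parallelize(range(1000))
    # False means sampling without replacement.
    return rdd.takeSample(False, 5)
```

Running sample_five_integers() in the next cell returns a list of five integers, which confirms that Spark is working inside the container.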

That’s it! Now you can start learning and experimenting with Spark!

Starting and stopping the Docker container

Note: these commands assume you used spark as the --name when you executed the docker run command above. If you used a different --name, substitute that for spark in the commands below.

If you want to stop the Docker container from running in the background:

To start the Docker container again:

To remove the Docker container altogether:
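The three operations above map onto the docker stop, docker start, and docker rm subcommands, each followed by the container name. As a small sketch, assuming the container is named spark and Docker is on your PATH, they can be wrapped in Python as follows; container_command and manage_container are hypothetical helper names.

```python
import subprocess

ALLOWED_ACTIONS = ("stop", "start", "rm")


def container_command(action, name="spark"):
    """Build the docker command for stopping, starting, or removing a container."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    return ["docker", action, name]


def manage_container(action, name="spark"):
    """Run the docker command and raise an error if Docker reports a failure."""
    subprocess.run(container_command(action, name), check=True)
```

For example, manage_container("stop") runs docker stop spark. Note that docker rm only removes a container that has already been stopped.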

See the Docker docs for more information on these and more Docker commands.

Using the Docker jupyter/pyspark-notebook image enables a cross-platform (Mac, Windows, and Linux) way to quickly get started with Spark code in Python. If you have a Mac and don’t want to bother with Docker, another option to quickly get started with Spark is using Homebrew and findspark. Check out the findspark documentation for more details.

As you can see, Docker allows you to quickly get started using Apache Spark in a Jupyter iPython Notebook, regardless of which operating system you’re running. My hope is that you can use this approach to spend less time trying to install and configure Spark, and more time learning and experimenting with it. Best of luck!